Automated pain recognition

Using artificial intelligence to improve pain management

Psychologists from Ulm and their cooperation partners have developed algorithms for automated pain recognition so that painkillers can be dosed more precisely. The researchers are now looking for industrial partners to bring their project into practical use.

When the face of the person in front of us is distorted by pain, it is immediately clear that they are suffering and are possibly unwell. However, every individual feels pain differently, which makes it much harder to recognize how severe the pain is. Even for specialist staff, it is harder still to determine the degree of pain in patients who cannot express themselves or can do so only poorly, such as children, dementia sufferers or unconscious patients in intensive care.

How to interpret emotions

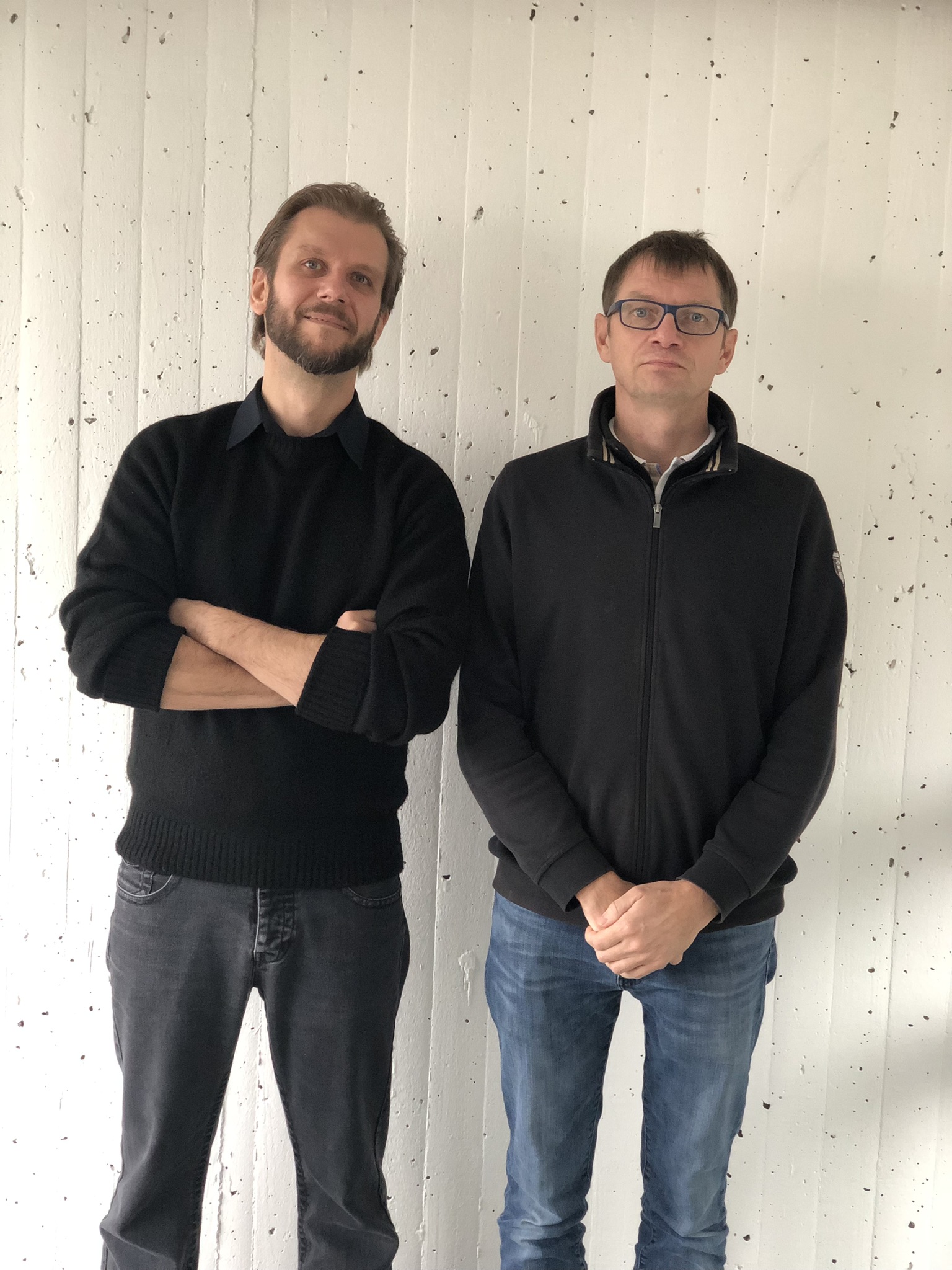

Pain researchers Dr. Sascha Gruss (left) and Prof. Dr. Steffen Walter (right) are looking for industrial partners for automated pain recognition. © University of Ulm

The numerical rating scale (NRS) is one of the methods that can be used to assess pain. It allows individuals to rate their pain on a scale from 0 to 10. Another method of measuring pain involves questionnaires or pain diaries. Prof. Dr. Steffen Walter, head of the Medical Psychology Section at Ulm University Hospital, explains a problem that often arises with such subjective measures: "The problem is that it is difficult to dose analgesics, i.e. pain-relieving drugs, on the basis of this imprecise information. When patients are prescribed too few painkillers, they are left in too much pain and they suffer. This can lead to cardiovascular problems or pain chronification. Alternatively, the patients might get way too many painkillers, causing drug dependence or respiratory depression, dizziness or severe perception and consciousness disorders."

Multimodal automated pain recognition may be the solution to this problem, which is why pain researcher Steffen Walter turned to affective computing 14 years ago. Recognizing emotions using artificial intelligence (AI) is possible in a wide variety of areas and situations. Basic emotions such as fear and joy can be recognized, as can complex states such as stress. AI can be used in areas such as robotics, telemedicine, psychotherapy or even pain recognition.

Physiological parameters in focus

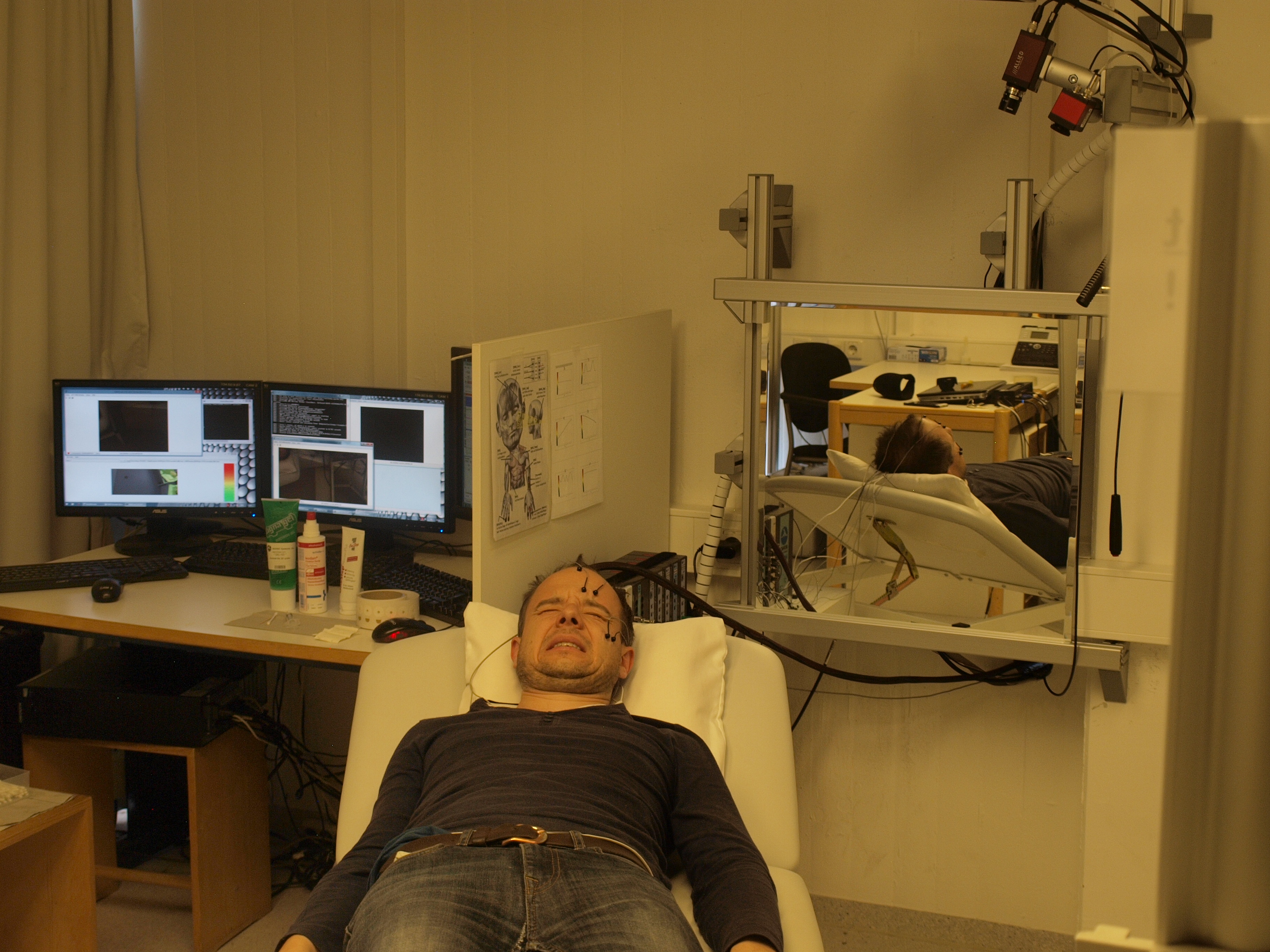

Researchers record a volunteer’s pain under controlled conditions. © University of Ulm

Back in 2012, Walter launched the project "Multimodal Automated Pain Recognition" with numerous cooperation partners (see infobox below). The project deals with clinical pain measurement, for which facial expressions and psychobiological parameters are recorded. As part of the project, volunteers were deliberately exposed to painful stimuli and a wide variety of parameters were recorded. "Basically, all parameters associated with these observational scales are interesting. For example, we record facial expressions, i.e. the muscles of the face, gestures and especially physiological parameters, such as skin conductance," says Walter.

Skin conductance, the electrical conductivity of the skin, is influenced by sweat secretion and is measured on the finger. It increases with arousal, for example due to pain. Other parameters include electromyography and cardiovascular parameters, such as ECG and blood volume pulse. "All of these aforementioned parameters are relevant in biofeedback measurements," Walter says. "We also record skin temperature and respiration and, as another modality, paralinguistics, which takes the form of groans and moans, or firm exhalations and inhalations," adds Dr. Sascha Gruss, who, having studied economics, turned to human biology 13 years ago, gaining his doctorate in this field.

AI is trained

The AI is then trained for automated pain recognition using the measured parameters. "AIs are mathematical algorithms that can be trained on an existing dataset. The aforementioned parameters are included in the dataset, so that it is clear which pattern must be present in the biosignals when pain occurs, for example high skin conductance or muscle tension. Once the AI has learned the new algorithms, it is able to apply them to an unknown biosignal. The AI can then say with a certain probability that the patient is feeling pain at the time the measurement is carried out," Gruss reports. Until now, automated pain recognition has mostly relied on single parameters such as skin conductance. The researchers from Ulm have deliberately chosen an approach with multiple modalities in order to avoid the incorrect results that often occur with just one parameter. "In our case, information fusion plays a major role. The numerous signals measured flow into an algorithm with the goal of obtaining a curve on a monitor that represents how pronounced the pain is," says Gruss. Combining the parameters could eliminate incorrect results. That said, a practical application that is both flexible and fast should not rely on too many parameters.
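The idea of information fusion can be illustrated with a minimal Python sketch. It assumes a simple late-fusion scheme: each modality already yields its own pain probability between 0 and 1, these are combined by a weighted average, and the result is smoothed over time into a curve. The article does not say how the Ulm researchers combine their signals, so the modality names, the weights and the helper functions `fuse` and `pain_curve` are hypothetical and chosen purely for illustration.

```python
from statistics import mean

# Hypothetical weights per modality (illustrative values, not from the project).
WEIGHTS = {"skin_conductance": 0.5, "emg": 0.25, "ecg": 0.25}


def fuse(probabilities, weights=WEIGHTS):
    """Late fusion: weighted average of per-modality pain probabilities.

    Modalities that are unknown or report None (for example a detached
    sensor) are skipped, and the remaining weights are renormalised.
    """
    available = {
        name: p
        for name, p in probabilities.items()
        if name in weights and p is not None
    }
    if not available:
        raise ValueError("no usable modality in this time step")
    total_weight = sum(weights[name] for name in available)
    return sum(weights[name] * p for name, p in available.items()) / total_weight


def pain_curve(frames, window=3):
    """Fuse every time step, then smooth with a trailing moving average."""
    fused = [fuse(frame) for frame in frames]
    return [
        mean(fused[max(0, i - window + 1): i + 1])
        for i in range(len(fused))
    ]


if __name__ == "__main__":
    # Three consecutive time steps with made-up per-modality probabilities.
    frames = [
        {"skin_conductance": 0.2, "emg": 0.1, "ecg": 0.3},
        {"skin_conductance": 0.9, "emg": None, "ecg": 0.6},  # EMG sensor dropped out
        {"skin_conductance": 0.8, "emg": 0.7, "ecg": 0.7},
    ]
    for step, value in enumerate(pain_curve(frames, window=2)):
        print(f"t={step}: fused pain estimate {value:.2f}")
```

Skipping missing modalities and renormalising the weights is one way to keep the curve available when a single sensor fails, which is part of the appeal of a multimodal approach. A real system would more likely learn how to combine the signals from data rather than use fixed weights.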

Industrial partners sought

The researchers from Ulm are now looking for industrial partners for precisely this type of application. The AI can now recognize the threshold for pain tolerance with a probability of 90 percent. As regards the pain threshold, a recognition rate of about 70 percent is possible. Following successful clinical trials in intensive care, the researchers are looking for industrial partners to develop a patient monitor prototype that can display a pain intensity curve.

Gruss explains that a lot of programming is still necessary for the AI to be able to recognize pain in real time. "We can imagine integrating our pain module into an existing system, so that this information would also support the examining physician," says the human biologist, adding that "this integration requires the use of interfaces, which in turn need to be identified as part of data protection requirements."

And of course, the development of the AI is not finished yet. In a later clinical application, data will continue to flow into the algorithm so that it keeps improving. Gruss comments: "There is no textbook approach for recognizing a patient’s pain. We want an AI that is able to cover peripheral areas as well."

"Multimodal automated pain recognition" project

- Project duration: 2012 to 2020

- Funding: Deutsche Forschungsgemeinschaft - German Research Foundation (DFG)

- Cooperation partners:

- Prof. Dr. Eberhard Barth, Department of Anaesthesiology and Intensive Medicine, Ulm University Hospital

- Prof. Dr. Ayoub Al-Hamadi and Philipp Werner, Institute for Information Technology and Communications IIKT, University of Magdeburg

- Prof. Dr. Oliver Wilhelm and Mattis Geiger, Differential Psychology and Psychological Diagnostics, University of Ulm

- Dr. Adriano Andrade, Biomedical Engineering Laboratory BioLab, Federal University of Uberlandia, Brazil

- PD Dr. Friedhelm Schwenker, Institute of Neuroinformatics, University of Ulm

- Prof. Dr. Margrit-Ann Geibel, Department of Oral and Maxillofacial Surgery, Ulm University Hospital